UV-CoT: Unsupervised Visual Chain-of-Thought Reasoning via Preference Optimization


Kesen Zhao 1

Beier Zhu 1✉

Qianru Sun 2

Hanwang Zhang 1

- 1 MReal, Nanyang Technological University

- 2 Singapore Management University

- ✉ Corresponding author

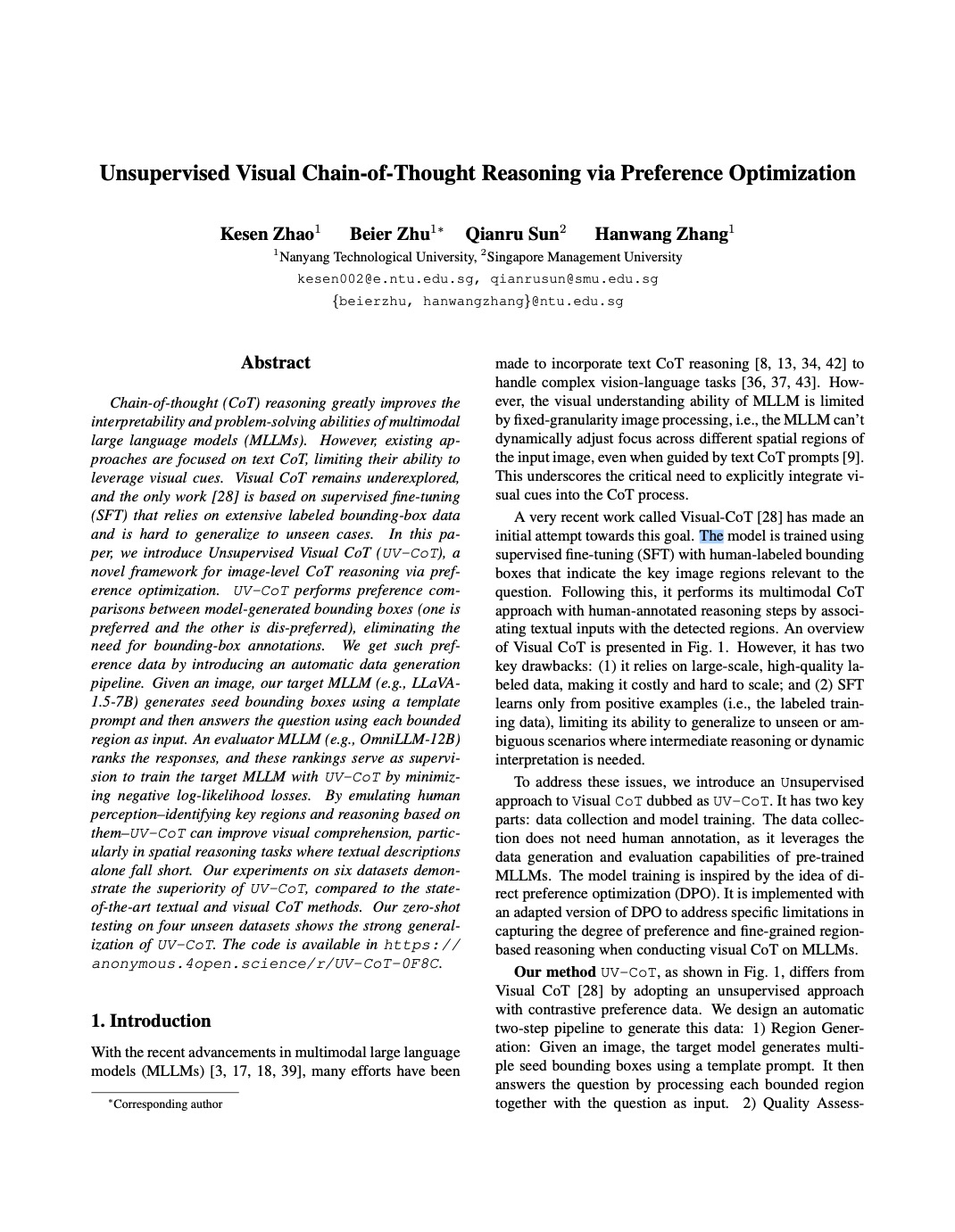

Overview of UV-CoT


Comparison between Visual CoT and our UV-CoT.

Left: Visual CoT depends on human-annotated bounding boxes to identify key regions. The model is trained with supervised fine-tuning to maximize the likelihood of the labeled data.

Right: UV-CoT removes the need for manual annotation. Given an input image, the model automatically generates initial (seed) bounding boxes and answers questions based on these regions. An evaluator multimodal LLM (MLLM) then scores the answers as an indirect measure of region quality. Finally, the target model is trained with preference optimization, which encourages it to favor regions that lead to better answers.
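The scoring-then-preference step described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Candidate` record, the `(x1, y1, x2, y2)` box format, the `min_gap` parameter, and the best-versus-worst pairing rule in `build_pair` are all hypothetical. `dpo_loss` is the standard Direct Preference Optimization (DPO) loss, swapped in as a representative preference-optimization objective; UV-CoT's actual objective is defined in the paper and may differ.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical box format (x1, y1, x2, y2); not taken from the UV-CoT code.
Box = Tuple[float, float, float, float]


@dataclass
class Candidate:
    box: Box      # region proposed by the target model
    answer: str   # answer the model produced from that region
    score: float  # evaluator MLLM's score for the answer (proxy for region quality)


def build_pair(candidates: List[Candidate],
               min_gap: float = 0.0) -> Optional[Tuple[Candidate, Candidate]]:
    """Return (preferred, dispreferred) as the highest- and lowest-scored candidates.

    Hypothetical pairing rule for illustration. Returns None when there are
    fewer than two candidates or the score gap does not exceed min_gap.
    """
    if len(candidates) < 2:
        return None
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    best, worst = ranked[0], ranked[-1]
    # Drop pairs the evaluator cannot clearly separate.
    if best.score - worst.score <= min_gap:
        return None
    return best, worst


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one pair: -log(sigmoid(beta * margin)).

    Each *_logp is the summed log-probability of a full response under the
    trainable policy or the frozen reference model.
    """
    margin = ((policy_chosen_logp - ref_chosen_logp)
              - (policy_rejected_logp - ref_rejected_logp))
    # -log(sigmoid(z)) equals softplus(-z)
    return softplus(-beta * margin)


cands = [
    Candidate((10, 10, 120, 90), "a red umbrella", 0.9),
    Candidate((0, 0, 40, 40), "a bench", 0.2),
    Candidate((15, 5, 110, 95), "an umbrella", 0.7),
]
pair = build_pair(cands)
print(pair[0].score, pair[1].score)  # 0.9 0.2
print(round(dpo_loss(0.0, 0.0, 0.0, 0.0), 4))  # 0.6931
```

When the policy and reference assign identical log-probabilities, the margin is zero and the loss is log 2; it falls below log 2 as the policy shifts probability toward the preferred region's response relative to the reference. Pairing the extreme scores gives each example the largest contrast the evaluator can offer, at the cost of discarding intermediate candidates.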

News 🎉

Our paper has been accepted to ICCV 2025. The latest version of the paper is available here:

[arXiv link]

Links

Visualization

Visualization of preference data generated by our method. Preferred bounding boxes are shown in red and dispreferred bounding boxes in blue.

Visualization of UV-CoT at inference time. Model-generated bounding boxes are shown in red.

Citation

@misc{zhao2025unsupervisedvisualchainofthoughtreasoning,
  title={Unsupervised Visual Chain-of-Thought Reasoning via Preference Optimization},
  author={Kesen Zhao and Beier Zhu and Qianru Sun and Hanwang Zhang},
  year={2025},
  eprint={2504.18397},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2504.18397},
}

Licence

The dataset is released under the CC BY-NC 4.0 License. The code is released under the MIT License.